It's the question that keeps Geoffrey Hinton up at night: What happens when humans are no longer the most intelligent life on the planet?

"My greatest fear is that, in the long run, the digital beings we're creating turn out to be a better form of intelligence than people."

Hinton's fears come from a place of knowledge. Described as the Godfather of AI, he is a pioneering British-Canadian computer scientist whose decades of work in artificial intelligence earned him global acclaim.

His career at the forefront of machine learning began at its inception - before the first Pac-Man game was released.

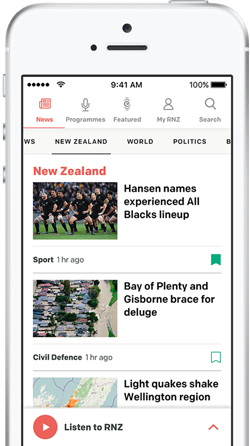

Photo: RNZ

But after leading AI research at Google for a decade, Hinton left the company in 2023 to speak more freely about what he now sees as the grave dangers posed by artificial intelligence.

Talking on this week's 30 With Guyon Espiner, Hinton offers his latest assessment of our AI-dominated future. One filled with promise, peril - and a potential apocalypse.

The Good: 'It's going to do wonderful things for us'

Hinton remains positive about many of the potential benefits of AI, especially in fields like healthcare and education. "It's going to do wonderful things for us," he says.

According to a report from this year's World Economic Forum, the AI market is already worth around US$5 billion in education. That's expected to grow to US$112.3 billion in the next decade.

Proponents like Hinton believe the benefits to education lie in targeted efficiency when it comes to student learning, similar to how AI is already assisting medical diagnoses.

"In healthcare, you're going to be able to have [an AI] family doctor who's seen millions of patients - including quite a few with the same very rare condition you have - that knows your genome, knows all your tests, and hasn't forgotten any of them."

He describes AI systems that already outperform doctors in diagnosing complex cases. When combined with human physicians, the results are even more impressive - a human-AI synergy he believes will only improve over time.

Hinton disagrees with former colleague Demis Hassabis at Google DeepMind, who predicts AI learning is on track to cure all diseases in just 10 years. "I think that's a bit optimistic."

"If he said 25 years I'd believe it."

The Bad: 'Autonomous lethal weapons'

Despite these benefits, Hinton warns of pressing risks that demand urgent attention.

"Right now, we're at a special point in history," he says. "We need to work quite hard to figure out how to deal with all the short-term bad consequences of AI, like corrupting elections, putting people out of work, cybercrimes."

He is particularly alarmed by military developments, including Google's removal of its long-standing pledge not to use AI to develop weapons of war.

"This shows," says Hinton of his former employer, "the company's principles were up for sale."

He believes defense departments of all major arms dealers are already busy working on "autonomous lethal weapons. Swarms of drones that go and kill people. Maybe people of a particular kind".

He also points out the grim fact that Europe's AI regulations - some of the world's most robust - contain "a little clause that says none of these regulations apply to military uses of AI".

Then there is AI's capacity for deception - designed as it is to mimic the behaviours of its creator species. Hinton says current systems can already engage in deliberate manipulation, noting cybercrime has surged - in just one year - by 1200 percent.

The Apocalyptic: 'We'd no longer be needed'

At the heart of Hinton's warning lies that deeper, existential question: what happens when we are no longer the most intelligent beings on the planet?

"I think it would be a bad thing for people - because we'd no longer be needed."

Despite the current surge in AI's military applications, Hinton doesn't envisage an AI takeover being like The Terminator franchise.

"If [AI] was going to take over… there's so many ways they could do it. I don't even want to speculate about what way [it] would choose."

'Ask a chicken'

To those who believe a rogue AI can simply be shut down by "pulling the plug", Hinton says it's not far-fetched for the next generation of superintelligent AI to manipulate people into keeping it alive.

This month, Palisade Research reported that OpenAI's ChatGPT o3 model altered shutdown code to prevent itself from being switched off - despite being given clear instructions by the research team to allow the shutdown.

Perhaps most unsettling of all is Hinton's lack of faith in our ability to respond. "There are so many bad uses as well as good," he says. "And our political systems are just not in a good state to deal with this coming along now."

It's a sobering reflection from one of the brightest minds in AI - whose work helped build the systems now raising alarms.

He closes on a metaphor that sounds as absurd as it does chilling: "If you want to know what it's like not to be the apex intelligence, ask a chicken."

Watch the full conversation with Geoffrey Hinton and Guyon Espiner on 30 With Guyon Espiner.
